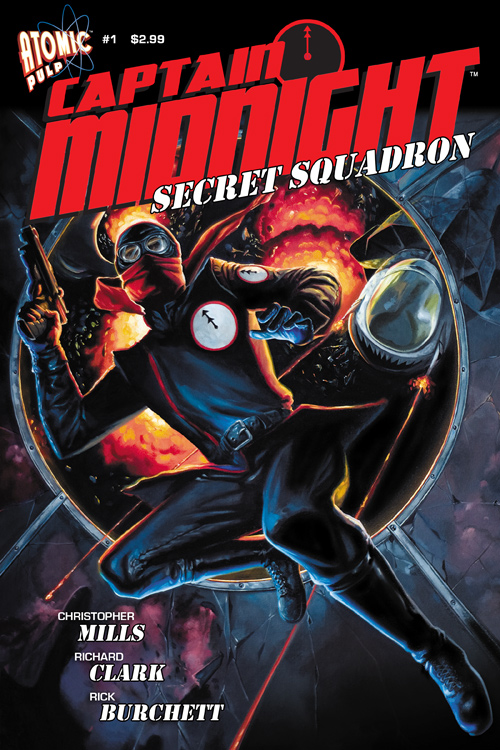

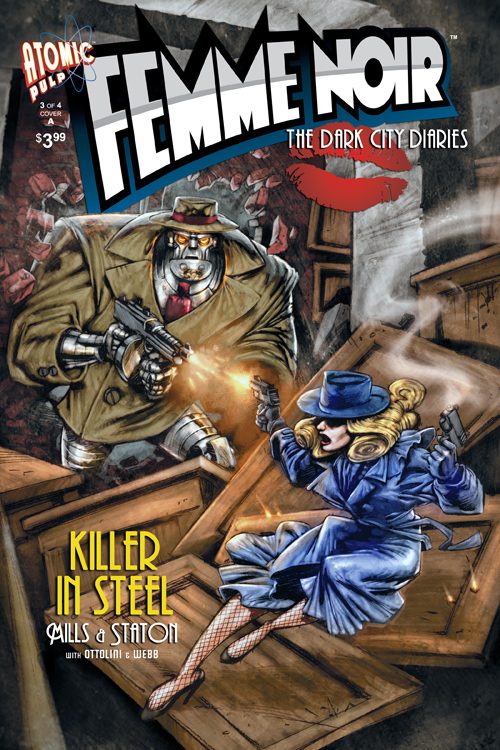

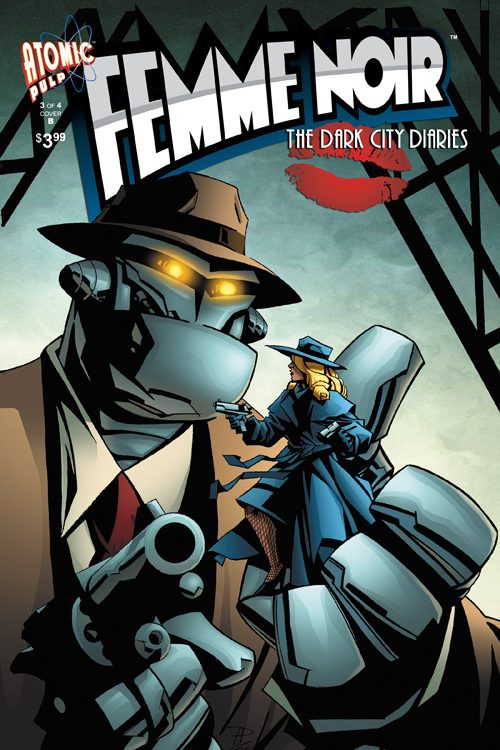

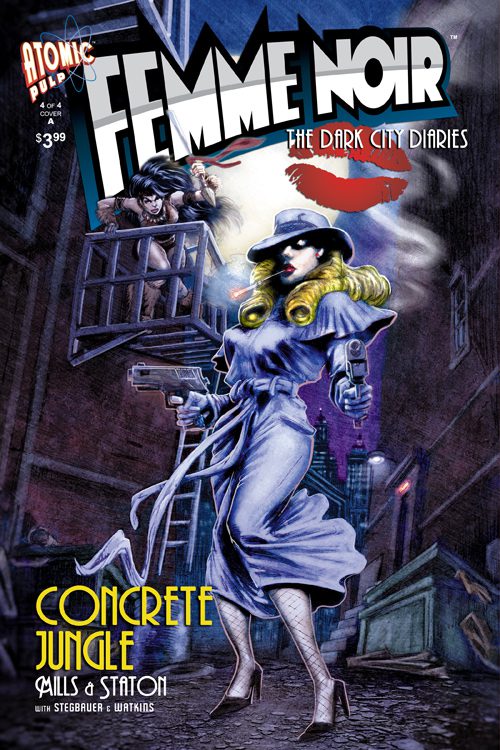

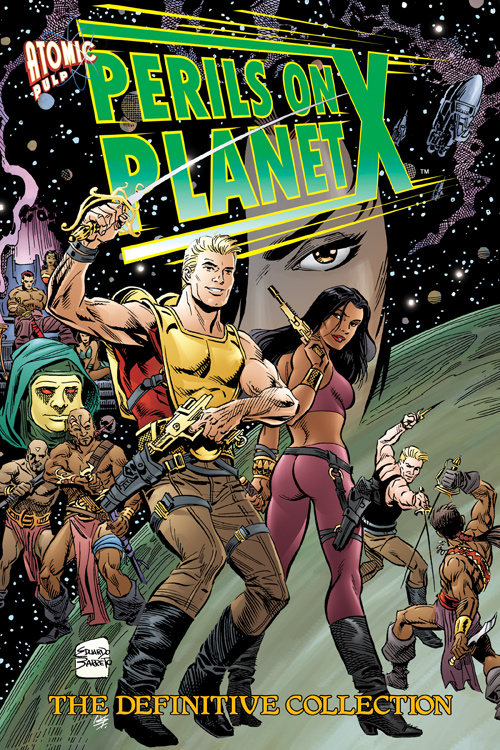

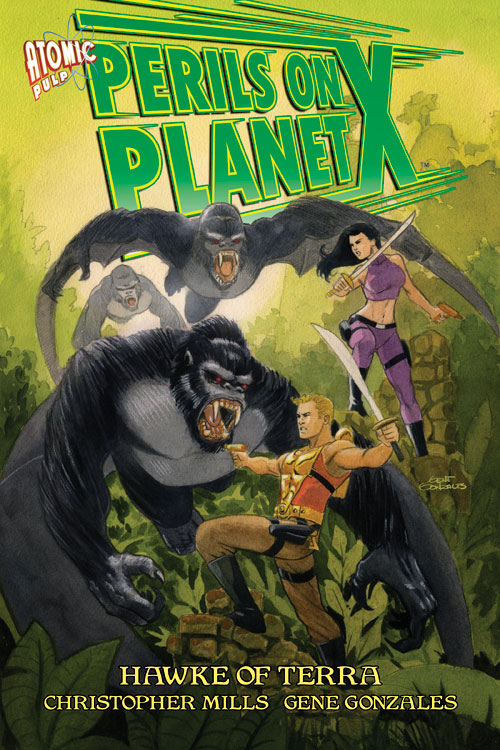

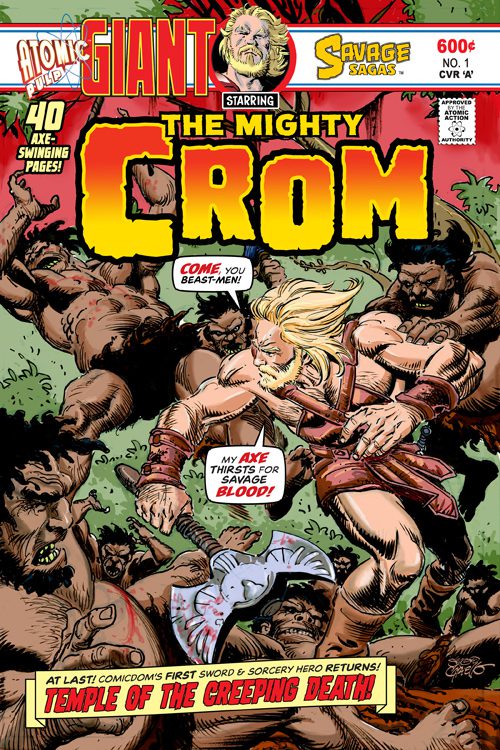

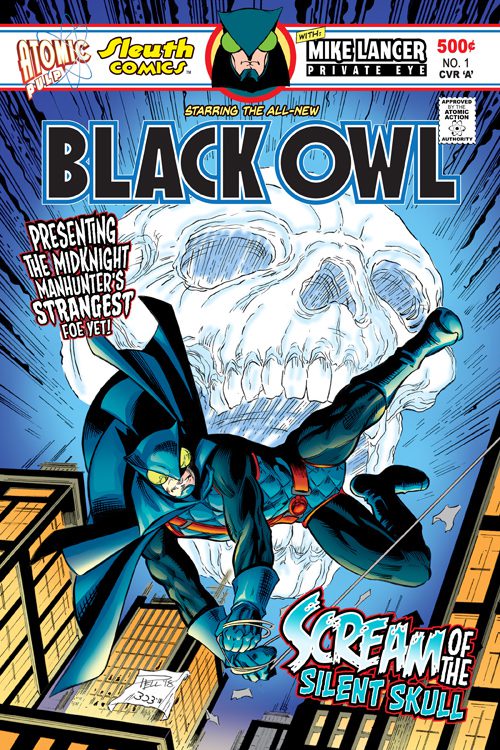

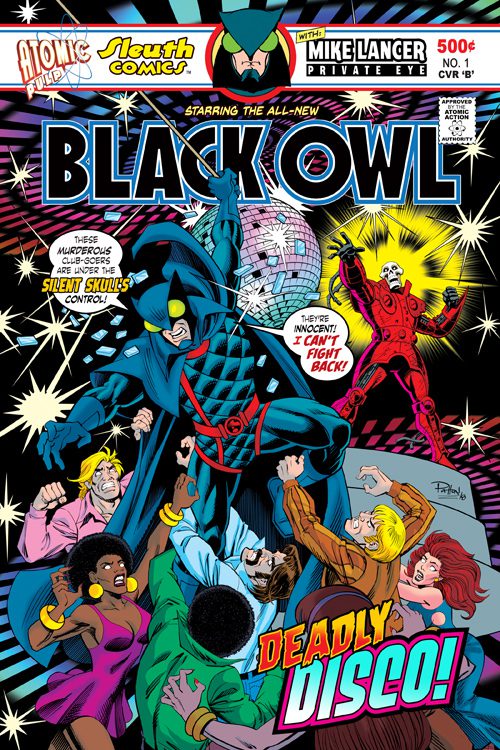

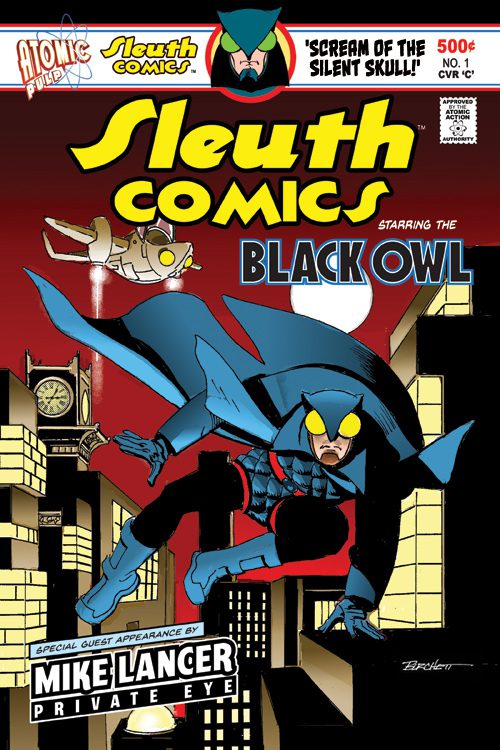

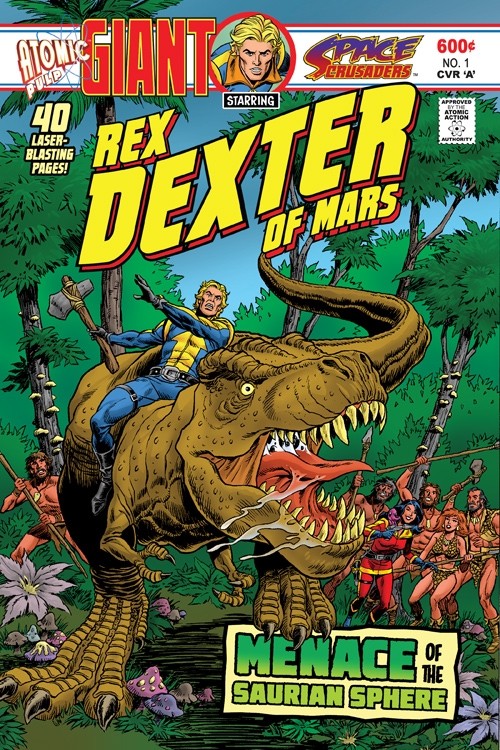

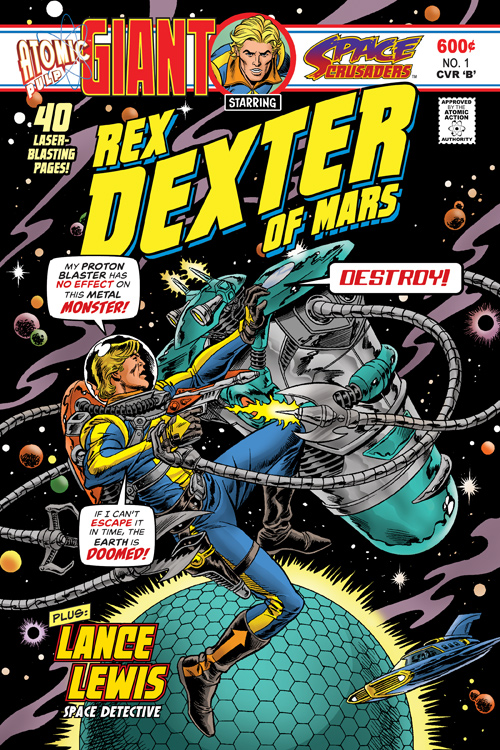

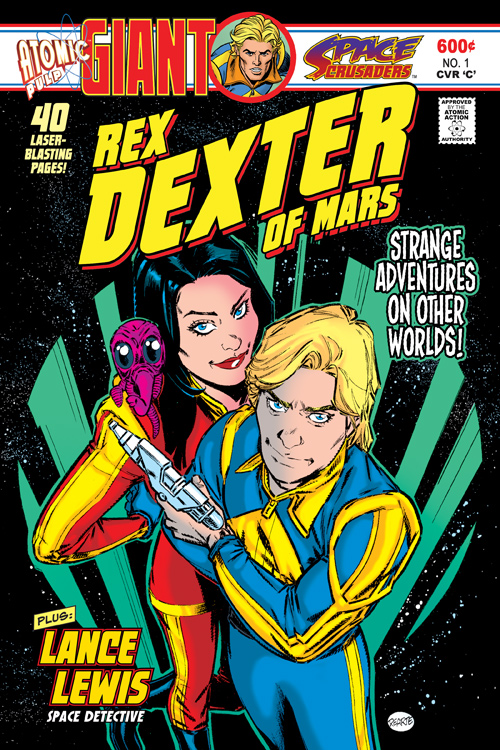

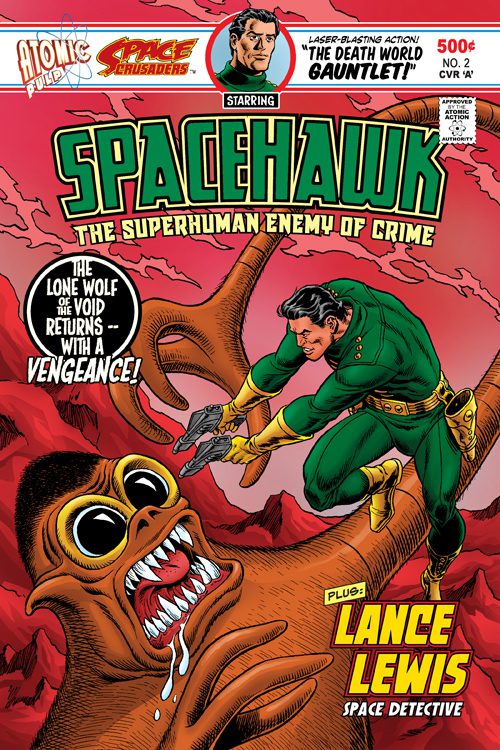

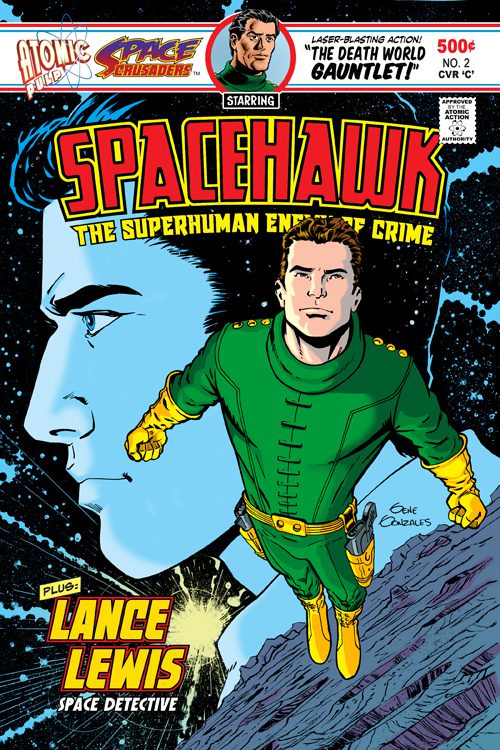

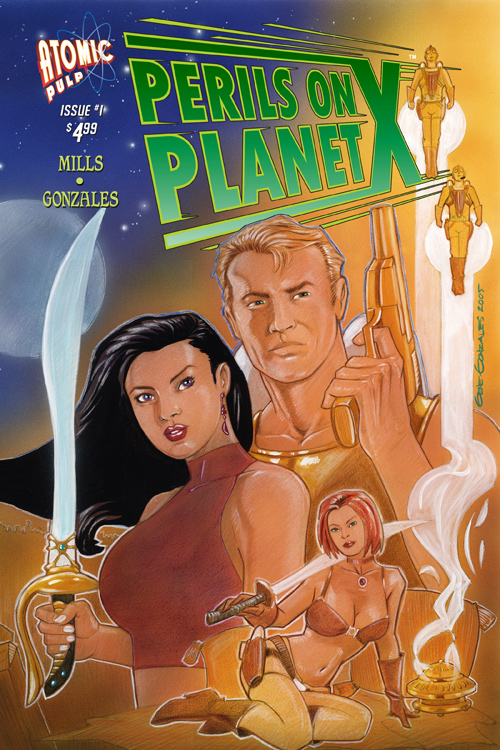

IndyPlanet is a 01 Comics Inc. company. The IndyPlanet logo and mascots are TM and © 01 Comics Inc. All rights reserved. All comics images and graphics are TM and © their respective owners and are used with permission. Printing and Fulfillment by Ka-Blam Digital Printing. Ka-Blam.com